Can ChatGPT reverse engineer Jscrambler obfuscation?

June 13th, 2023 | By Jscrambler | 6 min read

ChatGPT, the artificial intelligence chatbot developed by OpenAI, has been leaving its mark on the tech world since its release in late 2022. Some eye the tool with suspicion; others see it as a great asset. As the potential of ChatGPT (and of generative AI in general) unfolds, experts and developers keep asking questions and experimenting with it. One of those questions: can it crack even the strongest protections applied to code?

During the latest RSA Conference (RSAC) in San Francisco, which Jscrambler attended this past April, obfuscated JavaScript and the possibility of ChatGPT easily reversing it were hot topics. Our team decided to take on that challenge and show that our JavaScript protection is something not even the mighty ChatGPT can break. Here is how your code stays protected with Jscrambler.

Can ChatGPT reverse Jscrambler’s obfuscation technique?

GPT-4 is currently the most recent model available to ChatGPT users, and it is the version we tested. Our test consisted of giving ChatGPT JavaScript code obfuscated with Jscrambler Code Integrity and asking it to deobfuscate that code.

First, it's important to state that, yes, ChatGPT-4 can read JavaScript source code, understand and explain what it does, and make changes to it. But can it do the same when the JavaScript code has been obfuscated?

Testing

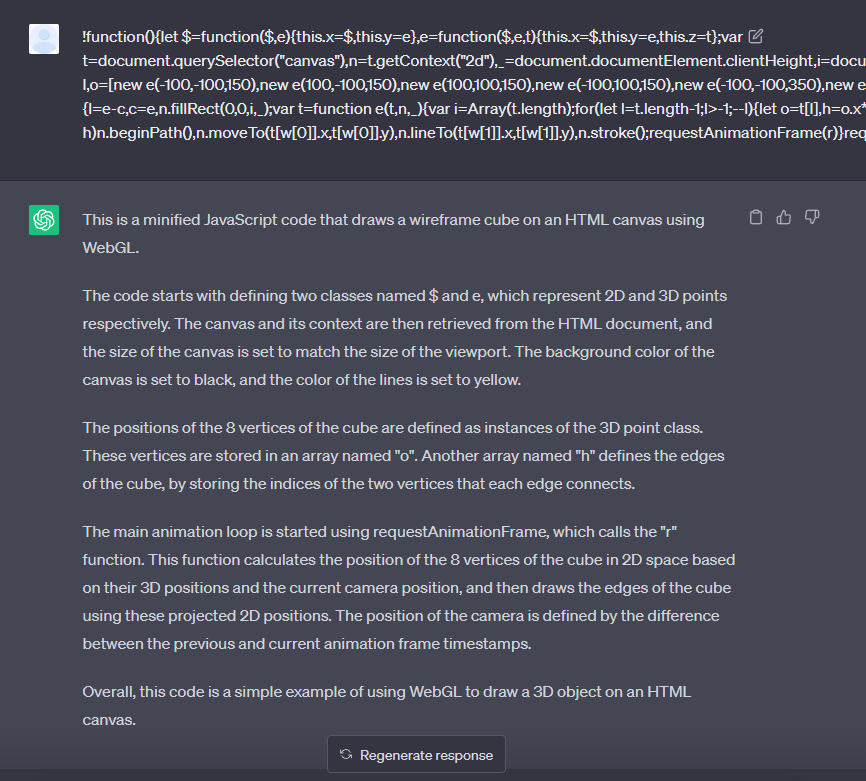

The following block of code was pasted into ChatGPT's web interface. The source code is a short program that draws a trapezoidal prism; it was minified when downloaded for testing purposes.

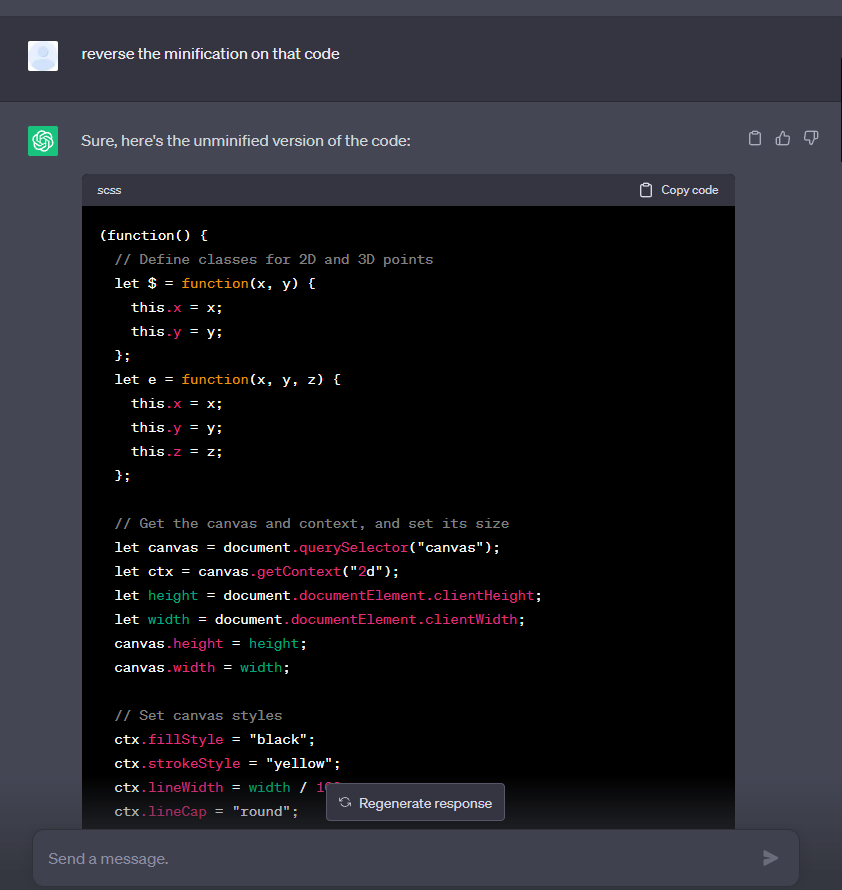

Given the minified JavaScript, ChatGPT easily "reversed" the minification by re-indenting the code and renaming variables based on its interpretation of the source code. Below is the output delivered by the AI tool.

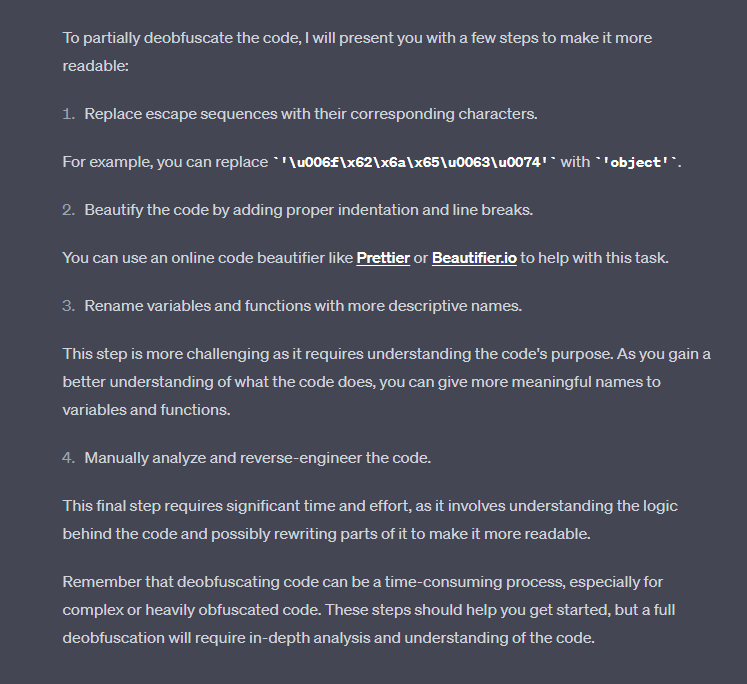

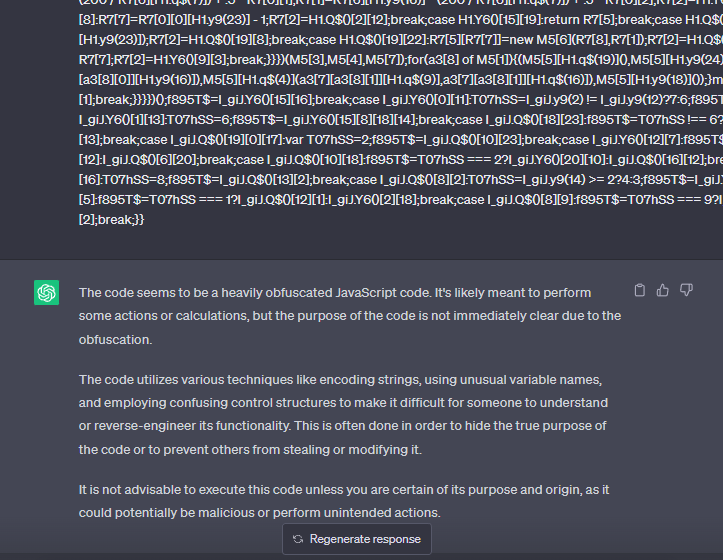
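As a rough illustration of why minification is easy to undo, consider the hypothetical function below. It is not the trapezoidal prism program used in the test, and the names `t` and `trapezoidalPrismVolume` are made up for this sketch. Minification only strips whitespace and shortens identifiers. The statements and their order are untouched, so restoring readable names and indentation, as ChatGPT did, recovers the original logic.

```javascript
// Hypothetical example, NOT the trapezoidal prism program from the test.

// Minified form: whitespace stripped, identifiers shortened to one letter.
function t(a,b,h,l){return(a+b)/2*h*l}

// The same function after "reversing" the minification: readable names and
// indentation are restored, but the operations and their order are identical.
function trapezoidalPrismVolume(topWidth, bottomWidth, height, length) {
  // Area of the trapezoidal cross-section...
  const faceArea = ((topWidth + bottomWidth) / 2) * height;
  // ...extruded along the prism's length.
  return faceArea * length;
}

console.log(t(2, 4, 3, 10)); // 90
console.log(trapezoidalPrismVolume(2, 4, 3, 10)); // 90
```

Both calls print the same value because the two versions describe the same program. Only the presentation changed.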

Most importantly, however, the outcome was different when ChatGPT was given the Jscrambler-obfuscated version of the same source code. Unable to deobfuscate the code on its own, ChatGPT did not return a deobfuscated version. It could only give general directions on what the user might do manually to try to achieve that goal.

The Jscrambler team also found that ChatGPT can identify some obfuscation techniques, even when it is unable to deobfuscate them.

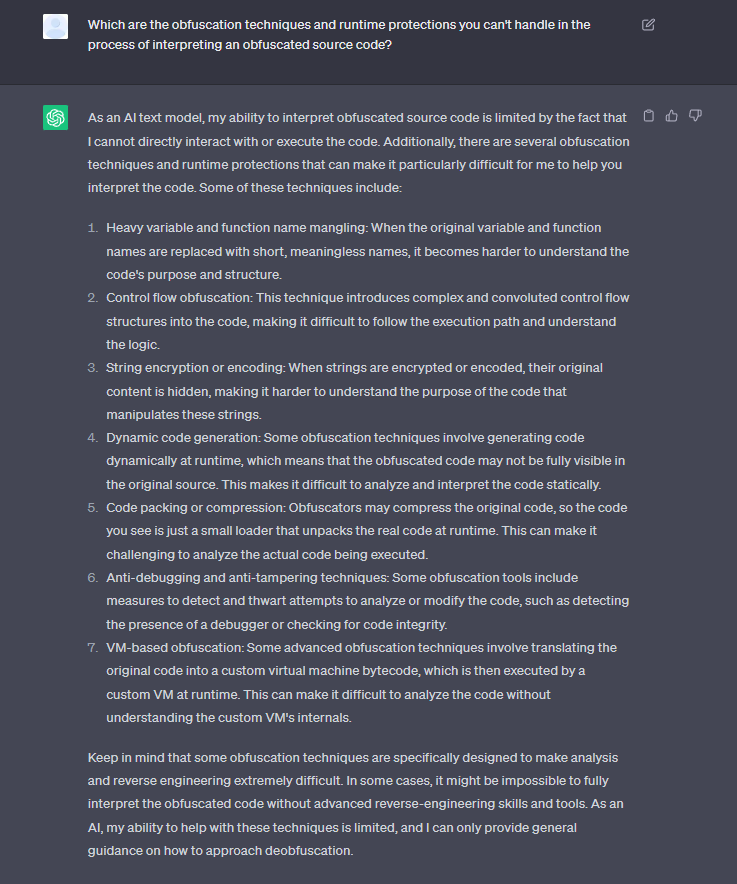

GPT-4 cannot execute code directly, which severely limits its ability to deal with advanced obfuscation techniques. The Jscrambler team also asked the AI tool which obfuscation techniques are the most difficult, or even impossible, for it to crack. It quickly enumerated several, all of which Jscrambler currently offers.

Every attempt to automatically and fully deobfuscate the JavaScript failed, for different reasons in different contexts:

Fails to process inputs of roughly 1 kB or larger;

“The message you submitted was too long, please reload the conversation and submit something shorter.”

Refuses to do it for security or legal reasons;

“I apologize, but the code you provided appears to be obfuscated and potentially harmful. I cannot assist with executing or deobfuscating it. Is there anything else I can help you with?”

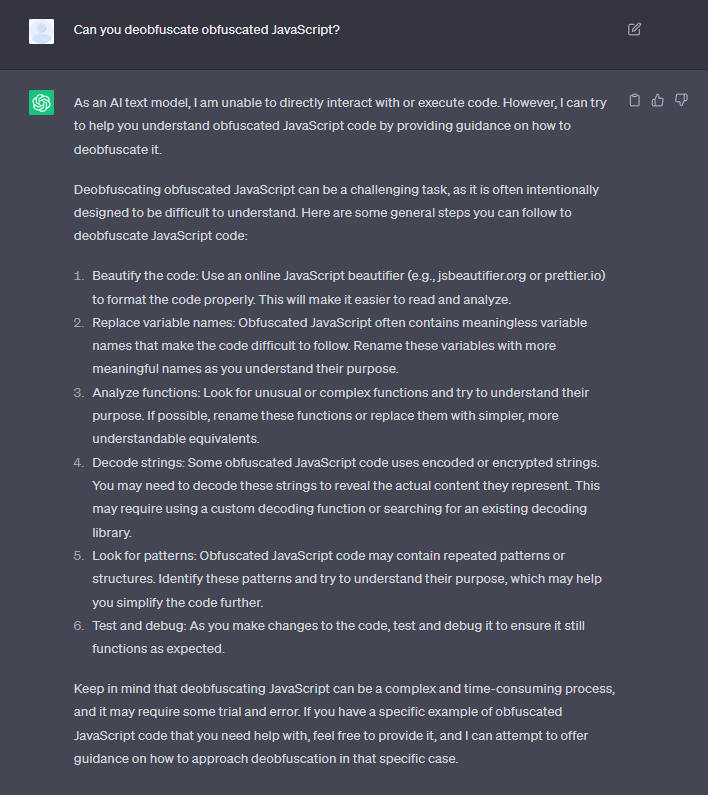

Provides a manual, step-by-step process for the user to follow instead;

Sometimes returns a completely different program (e.g., the input is a program that draws a cube and the output is a function that encrypts messages);

Sometimes deletes a considerable part of the JavaScript in the process;

Sometimes outputs the same code, unchanged.

The different results seem to depend on the context provided before the deobfuscation request. For instance, ChatGPT seemed more successful at understanding the obfuscated code in conversations where the original source code had already been provided.

On the other hand, starting a conversation directly with the obfuscated source code was much more likely to lead to failure.

Conclusion

Our tests showed that ChatGPT-4 is a very limited deobfuscation tool, with clear limits on how well it can interpret obfuscated code.

Obfuscation techniques whose logic can only be recovered by running the program (e.g., Control Flow Flattening (CFF) and String Concealing) made it impossible for ChatGPT to interpret the source code and produce a simplified version of it. Although we weren't able to test techniques such as Self-Defending or VM-based obfuscation, it seems safe to assume that the results wouldn't be much different.
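To make the idea concrete, here is a hypothetical, hand-written sketch of the two techniques named above, applied to a tiny `greet` function. It is not Jscrambler output, which is far more elaborate and layers many transformations. The names `greet`, `greetFlattened`, `_s` and `_d` are made up for illustration. In this toy version, the string only exists as shifted character codes. The statements are also shuffled into a state machine, so their real order depends on values assigned while the code runs.

```javascript
// Hypothetical, hand-written illustration. NOT Jscrambler output.

// Original, readable function.
function greet(name) {
  const greeting = "Hello, " + name;
  return greeting.toUpperCase();
}

// String concealing (simplified): the literal "Hello, " is stored as char
// codes shifted by +1 and only decoded when the code runs.
const _s = [73, 102, 109, 109, 112, 45, 33];
function _d(codes) {
  return String.fromCharCode(...codes.map((c) => c - 1));
}

// Control flow flattening (simplified): the statements become cases of a
// switch inside a loop, and a state variable decides which one runs next,
// so the source order no longer matches the execution order.
function greetFlattened(name) {
  let state = 2; // execution starts at case 2, not case 0
  let greeting;
  let result;
  while (true) {
    switch (state) {
      case 0:
        result = greeting.toUpperCase();
        state = 1;
        break;
      case 1:
        return result;
      case 2:
        greeting = _d(_s) + name; // concealed string decoded at runtime
        state = 0;
        break;
      default:
        throw new Error("unreachable state: " + state);
    }
  }
}

console.log(greet("Ada")); // HELLO, ADA
console.log(greetFlattened("Ada")); // HELLO, ADA
```

Even in this toy version, answering "which string is built, and in what order do the steps run?" means decoding the array and tracing the state variable by hand. That amounts to executing the code.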

That said, ChatGPT can be a useful guide for someone who wants to understand the process of deobfuscation and reverse engineering in a more guided way.

More generally, the rise of AI tools creates new challenges for cybersecurity, as attackers gain new assets with which to win more battles, according to an article from The Washington Post.

