Setting Up 5 Useful Middlewares For An Express API

March 23rd, 2016 | By Caio Ribeiro Pereira | 10 min read

This guide offers tips and tricks to improve the security and performance of a RESTful API built with Express. To keep the example simple, we will create an Express API with only one endpoint. To start, let’s set up our project.

Open the terminal and type the following commands:

    mkdir my-api
    cd my-api
    npm init

The npm init command runs a short questionnaire to set up some descriptions and generate the package.json file, the main file we will use to install modules for our project. Feel free to answer each question in your own way.

Now let’s install the Express framework by running the following command:

    npm install express --save

Now that Express is installed, let’s write our small and simple API code in a new index.js file:

    var express = require("express");

    var app = express();

    app.get("/", function(req, res) {
      res.json({
        status: "My API is alive!"
      });
    });

    app.listen(3000, function() {
      console.log("My API is running...");
    });

    module.exports = app;

To test if everything is OK, type the following command:

    node index.js

Once that is done, open the browser at http://localhost:3000/. We now have a small, functional API, and in the sections below we will explore some useful middleware to improve it.

Introduction to CORS

CORS (Cross-Origin Resource Sharing) is an important HTTP mechanism. It determines whether asynchronous requests from other domains are allowed.

In practice, CORS is just a set of HTTP headers sent by the server. Those headers inform which domains can consume the API, which HTTP methods are allowed, and which endpoints can be shared publicly with applications from other domains.
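To make the role of those headers concrete, here is a minimal sketch that builds them by hand. The buildCorsHeaders helper, the policy object, and the example origins are hypothetical names chosen for illustration; a real middleware handles more cases (such as preflight requests) than this sketch does.

```javascript
// Hypothetical helper: builds the CORS response headers for one request.
// `policy` lists the allowed domains, methods, and request headers.
function buildCorsHeaders(requestOrigin, policy) {
  // An origin that is not on the allowlist gets no CORS headers at all,
  // so the browser refuses to hand the response to the calling script.
  if (!policy.origins.includes(requestOrigin)) {
    return {};
  }
  return {
    "Access-Control-Allow-Origin": requestOrigin,
    "Access-Control-Allow-Methods": policy.methods.join(","),
    "Access-Control-Allow-Headers": policy.headers.join(","),
    // Tells caches that the response varies with the Origin request header.
    "Vary": "Origin"
  };
}

// Example values only, not a recommendation.
var policy = {
  origins: ["http://localhost:3001"],
  methods: ["GET", "POST"],
  headers: ["Content-Type", "Authorization"]
};

console.log(buildCorsHeaders("http://localhost:3001", policy));
console.log(buildCorsHeaders("http://other.example", policy));
```

The first call prints the three Access-Control-* headers plus Vary, while the second prints an empty object because the origin is not on the list.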

Enabling CORS in the API

As we are developing an API that will serve data to any kind of client-side application, we need to enable the CORS middleware so that the endpoints become public, meaning that clients on other domains can make requests to our API.

To enable it, let’s install and use the cors module:

    npm install cors --save

Then, to initialize it, add the middleware with app.use(cors()):

    var express = require("express");
    var cors = require("cors");

    var app = express();

    app.use(cors());

    app.get("/", function(req, res) {
      res.json({
        status: "My API is alive!"
      });
    });

    app.listen(3000, function() {
      console.log("My API is running...");
    });

    module.exports = app;

When called with no arguments, cors() grants full access to our API. However, it is advisable to control which client domains have access, which methods they can use, and which headers clients are allowed to send in the request.

In our case, let’s set up only three attributes: origin (allowed domains), methods (allowed methods), and allowedHeaders (allowed request headers).

So, let’s add some parameters inside app.use(cors()):

    var express = require("express");
    var cors = require("cors");

    var app = express();

    app.use(cors({
      origin: ["http://localhost:3001"],
      methods: ["GET", "POST"],
      allowedHeaders: ["Content-Type", "Authorization"]
    }));

    app.get("/", function(req, res) {
      res.json({
        status: "My API is alive!"
      });
    });

    app.listen(3000, function() {
      console.log("My API is running...");
    });

    module.exports = app;

Now we have an API that only allows browser-based client apps served from http://localhost:3001. Such a client can only make requests using the GET or POST methods and send the Content-Type and Authorization headers.

A bit more about CORS

To study CORS further, understand its headers and, most importantly, learn how to customize the rules for your API, I recommend reading the full documentation.

Generating logs

We are going to set up our application to generate logs of users’ requests. To do this, let’s use the morgan module, a middleware for generating request logs on the server.

To install it, type the following command:

    npm install morgan --save

After that, add app.use(morgan("common")) at the top of the middleware stack so that all requests are logged:

    var express = require("express");
    var cors = require("cors");
    var morgan = require("morgan");

    var app = express();

    app.use(morgan("common"));

    app.use(cors({
      origin: ["http://localhost:3001"],
      methods: ["GET", "POST"],
      allowedHeaders: ["Content-Type", "Authorization"]
    }));

    app.get("/", function(req, res) {
      res.json({
        status: "My API is alive!"
      });
    });

    app.listen(3000, function() {
      console.log("My API is running...");
    });

    module.exports = app;

To test the log generation, restart the server and access any API address, such as http://localhost:3000/, several times.

After making some requests, take a look at the terminal.
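The "common" format follows the Common Log Format used by many web servers. To show what each part of a log line means, here is a minimal sketch that assembles one by hand. The commonLogLine and clfDate helpers and the sample values are hypothetical; this is not how morgan is implemented, only an illustration of the line layout.

```javascript
// Month abbreviations used by the Common Log Format date.
var MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
              "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"];

function pad2(n) {
  return String(n).padStart(2, "0");
}

// Formats a Date as dd/Mon/yyyy:HH:mm:ss +0000 (always UTC in this sketch).
function clfDate(date) {
  return pad2(date.getUTCDate()) + "/" + MONTHS[date.getUTCMonth()] + "/" +
    date.getUTCFullYear() + ":" + pad2(date.getUTCHours()) + ":" +
    pad2(date.getUTCMinutes()) + ":" + pad2(date.getUTCSeconds()) + " +0000";
}

// Hypothetical helper: assembles one line in Common Log Format:
// address, user, date, request line, status code, response size.
function commonLogLine(entry) {
  return entry.remoteAddr + " - " + (entry.user || "-") +
    " [" + clfDate(entry.date) + "] " +
    '"' + entry.method + " " + entry.url + " HTTP/" + entry.httpVersion + '" ' +
    entry.status + " " + (entry.contentLength || "-");
}

// Sample request data, made up for the example.
var line = commonLogLine({
  remoteAddr: "::1",
  date: new Date(Date.UTC(2016, 2, 23, 12, 0, 0)),
  method: "GET",
  url: "/",
  httpVersion: "1.1",
  status: 200,
  contentLength: 29
});

console.log(line);
// prints: ::1 - - [23/Mar/2016:12:00:00 +0000] "GET / HTTP/1.1" 200 29
```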

Configuring parallel processing using cluster module

Node.js runs JavaScript code on a single thread. Some developers consider this a drawback, and it discourages them from learning Node.js or taking it seriously. However, despite being single-threaded, Node.js can be set up for parallel processing by using the native module called cluster.

This module starts new processes of the same application, and it takes care of sharing the same network port among the active processes (which this article calls clusters).

The number of processes is up to us to decide, but a good practice is to base it on the number of processor cores in the server, or on the number of cores multiplied by the number of processors.

For example, with a single eight-core processor, you can start eight processes, creating a network of eight clusters. With four processors of eight cores each, you can create a network of thirty-two clusters.
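The sizing rule above can be sketched with the built-in os module. This is a minimal sketch: workerCount and its maxWorkers parameter are hypothetical names, not part of Node.js. Note that os.cpus() returns one entry per logical core across all processors, so the "cores times processors" total is normally already reflected in its length.

```javascript
var os = require("os");

// Hypothetical helper: decides how many worker processes to start.
// Defaults to one per logical CPU core, with an optional upper bound.
function workerCount(maxWorkers) {
  var cores = os.cpus().length;
  // Always start at least one worker, even if no core is reported.
  var count = Math.max(1, cores);
  return maxWorkers ? Math.min(count, maxWorkers) : count;
}

console.log("Logical cores:", os.cpus().length);
console.log("Workers to start:", workerCount());
console.log("Workers capped at 2:", workerCount(2));
```

Capping the count can be useful when the server also runs other services that need CPU time.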

To make sure the clusters work in a distributed and organized way, a parent process (also known as the cluster master) must exist. It is responsible for balancing the load among the other processes, called child processes (or cluster slaves). This technique is very easy to implement in Node.js, since the distribution of processing is abstracted away from the developer.

Another advantage is that clusters are independent. That is, if one cluster goes down, the others keep working. However, you have to manage the startup and shutdown of clusters yourself to make sure a cluster that went down is replaced.

Based on these concepts, let’s put clusters into practice.

Create the file clusters.js in the root directory. See the code below:

    var cluster = require("cluster");
    var os = require("os");

    const CPUS = os.cpus();

    if (cluster.isMaster) {
      // Fork one child process per CPU core
      CPUS.forEach(function() {
        cluster.fork();
      });

      cluster.on("listening", function(worker) {
        console.log("Cluster %d connected", worker.process.pid);
      });

      cluster.on("disconnect", function(worker) {
        console.log("Cluster %d disconnected", worker.process.pid);
      });

      cluster.on("exit", function(worker) {
        console.log("Cluster %d is dead", worker.process.pid);
        // Ensuring a new cluster will start if an old one dies
        cluster.fork();
      });
    } else {
      require("./index.js");
    }

This time, to run the server, use the command:

    node clusters.js

After executing this command, the application runs distributed across the clusters. To confirm it is working, you will see the message "My API is running..." more than once in the terminal.

Basically, we load the cluster module and check whether the current process is the master via the cluster.isMaster variable (since Node.js 16, the preferred name is cluster.isPrimary, and isMaster is deprecated).

In the master process, a loop runs over the processing cores (CPUs), forking a new slave cluster for each one inside CPUS.forEach(function() { cluster.fork() }).

A newly created process (in this case, a child process) does not enter the if (cluster.isMaster) conditional. Instead, the application server is started for it via require("./index.js").

The code also handles some events emitted by the cluster master. In the example above, we only used the main events, listed below:

listening: happens when a cluster starts listening on a port. In this case, our application listens on port 3000;

disconnect: happens when a cluster is disconnected from the cluster network;

exit: happens when a cluster’s process is terminated in the OS.

Developing clusters

There is a lot more to explore about clusters in Node.js. Here we applied just enough to run parallel processing. If you need to implement more elaborate clusters, I recommend reading the documentation.

Compressing responses using GZIP middleware

To make responses lighter and faster to load, let’s enable another middleware, responsible for compressing the JSON responses and the static files your application serves into GZIP format, which is supported by all major browsers. We will make this simple but important change using the compression module.

Let’s install it:

    npm install compression --save

After that, we have to include its middleware in the index.js file:

    var express = require("express");
    var cors = require("cors");
    var morgan = require("morgan");
    var compression = require("compression");

    var app = express();

    app.use(morgan("common"));

    app.use(cors({
      origin: ["http://localhost:3001"],
      methods: ["GET", "POST"],
      allowedHeaders: ["Content-Type", "Authorization"]
    }));

    app.use(compression());

    app.get("/", function(req, res) {
      res.json({
        status: "My API is alive!"
      });
    });

    app.listen(3000, function() {
      console.log("My API is running...");
    });

    module.exports = app;
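To get a feel for how much GZIP can save, here is a minimal sketch that uses Node's built-in zlib module directly, outside of Express. The payload is made up for the example, and the exact sizes depend on the data, so treat the numbers as illustrative only.

```javascript
var zlib = require("zlib");

// Build a repetitive JSON payload, similar to a list endpoint response.
var items = [];
for (var i = 0; i < 200; i++) {
  items.push({ id: i, status: "My API is alive!" });
}
var body = Buffer.from(JSON.stringify(items), "utf8");

// Compress with GZIP, as a compression middleware would before sending.
var compressed = zlib.gzipSync(body);

// Decompress to confirm the client gets the original bytes back.
var restored = zlib.gunzipSync(compressed);

console.log("Original size:  ", body.length, "bytes");
console.log("Compressed size:", compressed.length, "bytes");
console.log("Round trip ok:  ", restored.equals(body));
```

Very small bodies, like the single status message of our endpoint, gain little from compression. As far as I know, the compression module skips responses below a configurable size threshold for that reason, so check its documentation before expecting every response to be compressed.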

Installing SSL support to use HTTPS

Nowadays, applications are expected to provide a secure connection between the server and the client. To do this, many applications use security certificates to establish an SSL (Secure Sockets Layer) connection, or its successor TLS, via the HTTPS protocol.

To implement an HTTPS connection in a production environment, you need to obtain a digital certificate, usually by buying one from a certificate authority.

Assuming you have one, put its two files (one with the .key extension and the other with the .cert extension) in the project’s root. After that, let’s use the native https module to allow our server to start using the HTTPS protocol, and the fs module to open and read the certificate files, my-api.key and my-api.cert, which are used as the credentials to start our server in HTTPS mode.

To do this, we are going to replace app.listen() with https.createServer(credentials, app).listen().

Take a look at the code below for our API:

    var express = require("express");
    var cors = require("cors");
    var morgan = require("morgan");
    var compression = require("compression");
    var fs = require("fs");
    var https = require("https");

    var app = express();

    app.use(morgan("common"));

    app.use(cors({
      origin: ["http://localhost:3001"],
      methods: ["GET", "POST"],
      allowedHeaders: ["Content-Type", "Authorization"]
    }));

    app.use(compression());

    app.get("/", function(req, res) {
      res.json({
        status: "My API is alive!"
      });
    });

    var credentials = {
      key: fs.readFileSync("my-api.key", "utf8"),
      cert: fs.readFileSync("my-api.cert", "utf8")
    };

    https
      .createServer(credentials, app)
      .listen(3000, function() {
        console.log("My API is running...");
      });

    module.exports = app;

Congratulations! Now your application runs over a secure protocol, which encrypts the data in transit between client and server. Note that in a real project, this kind of implementation requires a valid digital certificate, so don’t forget to get one if you put a serious API in a production environment.

To test these changes, just restart the server and go to https://localhost:3000/.

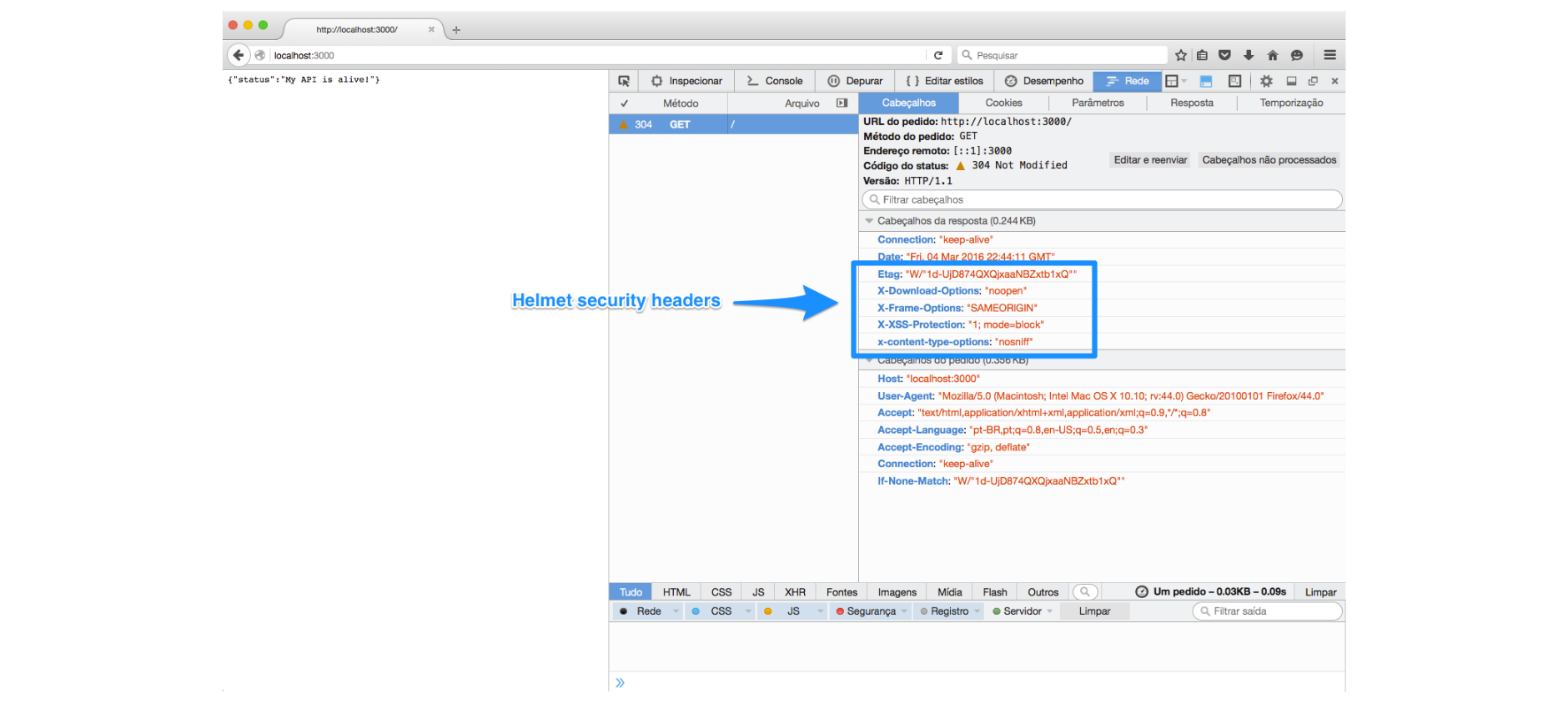
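One practical detail: fs.readFileSync throws if my-api.key or my-api.cert is missing, which stops the server at startup with a stack trace. As a minimal sketch, assuming a hypothetical loadCredentials helper that is not part of the code above, the files can be checked first so the problem is reported more clearly:

```javascript
var fs = require("fs");

// Hypothetical helper: returns HTTPS credentials, or null when either
// file is missing, so the caller can decide how to report the problem.
function loadCredentials(keyPath, certPath) {
  if (!fs.existsSync(keyPath) || !fs.existsSync(certPath)) {
    return null;
  }
  return {
    key: fs.readFileSync(keyPath, "utf8"),
    cert: fs.readFileSync(certPath, "utf8")
  };
}

var credentials = loadCredentials("my-api.key", "my-api.cert");

if (credentials) {
  console.log("Certificate files found, HTTPS can be started.");
} else {
  console.log("Certificate files not found, check my-api.key and my-api.cert.");
}
```

When credentials is not null, it can be passed to https.createServer(credentials, app) exactly as in the code above.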

Armoring the API with Helmet

As we finish developing our API, let’s include a very important module: a security middleware that helps protect against several kinds of attacks over HTTP/HTTPS.

This module is called helmet. It is a set of nine internal middlewares, responsible for the following HTTP settings:

Configures the Content Security Policy;

Removes the header X-Powered-By that informs the name and the version of a server;

Configures rules for HTTP Public Key Pinning;

Configures rules for HTTP Strict Transport Security;

Treats the header X-Download-Options for Internet Explorer 8+;

Disables the client-side caching;

Prevents sniffing attacks on the client MIME type;

Prevents clickjacking attacks;

Protects against XSS (Cross-Site Scripting) attacks.

To sum up, even if you do not know much about HTTP security, you can use the helmet module: besides having a simple interface, it will armor your web application against many types of attacks.
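Most of the settings listed above come down to adding or removing HTTP response headers. Here is a minimal sketch that sets a few of them by hand on a stand-in response object. The applySecurityHeaders helper and the fakeRes object are hypothetical, and the header values are common examples, not necessarily the defaults helmet uses.

```javascript
// Hypothetical helper: sets a few of the headers described above by hand.
// The values are common examples, not necessarily helmet's exact defaults.
function applySecurityHeaders(res) {
  // Hide the server technology.
  res.removeHeader("X-Powered-By");
  // Ask browsers to use HTTPS only for the next 180 days.
  res.setHeader("Strict-Transport-Security", "max-age=15552000; includeSubDomains");
  // Stop browsers from guessing (sniffing) the MIME type.
  res.setHeader("X-Content-Type-Options", "nosniff");
  // Forbid rendering the page inside frames on other sites (clickjacking).
  res.setHeader("X-Frame-Options", "SAMEORIGIN");
  // Stop Internet Explorer from opening downloads in the site's context.
  res.setHeader("X-Download-Options", "noopen");
}

// A tiny stand-in for the response object, just to show the result.
var headers = { "X-Powered-By": "Express" };
var fakeRes = {
  setHeader: function(name, value) { headers[name] = value; },
  removeHeader: function(name) { delete headers[name]; }
};

applySecurityHeaders(fakeRes);
console.log(headers);
```

In a real application there is no need to write this by hand, since the middleware takes care of it. The sketch only shows the kind of change that ends up in each response.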

To install it, run the command:

    npm install helmet --save

To ensure maximum security on our API, we are going to use all the middleware provided by the helmet module, which can easily be included via app.use(helmet()):

    var express = require("express");
    var cors = require("cors");
    var morgan = require("morgan");
    var compression = require("compression");
    var fs = require("fs");
    var https = require("https");
    var helmet = require("helmet");

    var app = express();

    app.use(morgan("common"));

    app.use(helmet());

    app.use(cors({
      origin: ["http://localhost:3001"],
      methods: ["GET", "POST"],
      allowedHeaders: ["Content-Type", "Authorization"]
    }));

    app.use(compression());

    app.get("/", function(req, res) {
      res.json({
        status: "My API is alive!"
      });
    });

    var credentials = {
      key: fs.readFileSync("my-api.key", "utf8"),
      cert: fs.readFileSync("my-api.cert", "utf8")
    };

    https
      .createServer(credentials, app)
      .listen(3000, function() {
        console.log("My API is running...");
      });

    module.exports = app;

Now, restart your application and go to https://localhost:3000/.

Open the browser’s developer tools and, in the Network tab, inspect the details of the GET / request. There you’ll see new items included in the response headers.

Conclusion

Now you have an API that follows some security best practices and is protected against some common attacks. Requests are processed in parallel using clusters, and responses are delivered using GZIP compression.

CORS restricts which web clients can use the API, and all requests are logged via the morgan module.

Feel free to use this small API as a reference for your new Express project.
